Evaluation of Information Systems

Information technology is of immense significance in business and other fields, and huge amounts of money are spent on it around the globe (Seddon, 2001). It is therefore necessary to evaluate the outcomes of information systems. Evaluation provides the oversight needed to steer the management of information systems in the right direction. Evaluation is considered to be "undertaken as a matter of course in the attempt to gauge how well something meets a particular expectation, objective or need" (Hirschheim and Smithson, 1999). From a management viewpoint in particular, Willcocks (1992) described information system evaluation as the activity of "establishing by quantitative and/or qualitative means the worth of IT to the organization." The result of such an assessment can then inform an organization's decisions when managing its information systems. An organization has to take important decisions throughout the life cycle of an information system, the most obvious of which are go/no-go investment decisions.

Evaluations can serve many purposes, and these purposes show in evaluations being either formative or summative (Remenyi, Money, and Sherwood-Smith, 2000). In formative evaluation, the organization evaluates in order to improve performance, focusing on the future (ex-ante). Summative evaluations are carried out exclusively to assess the quality of past performance (ex-post). Thus, any evaluation should have both a monitoring and a forecasting aspect. Evaluating Information System performance means evaluating the performance of hardware, software, computer networks, data and human resources. The main objective of evaluating Information System functional performance is upgrading the system and, especially, improving the quality of its maintenance. Numerous information system evaluation methodologies have been proposed in management studies.

Information system evaluation is not a simple task; it is a difficult process that involves a variety of dimensions (Peffers and Saarinen, 2002) and various stakeholders (McAulay et al., 2002). Information system investments often yield intangible benefits, and these benefits are often realised over a long period of time (Saarinen and Wijnhoven, 1994). Ad-hoc practices for information system evaluation are commonly reported (Irani and Love, 2001), and simple procedures such as the payback period are used in evaluation (Lederer and Mendelow, 1993). Because evaluation is an intricate process, there are many suggestions for how to evaluate IT systems. Much of the literature on evaluation takes a formal-rational view and treats evaluation as a largely quantitative process of computing the likely costs and benefits on the basis of defined criteria (Walsham, 1993).
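The payback period mentioned above is the time needed for cumulative net inflows to recover the initial investment. The following is a minimal Python sketch, assuming yearly net cash flows that arrive evenly within each year (so the final, partial year is interpolated linearly). The function name and the figures are hypothetical illustrations, not taken from the cited studies.

```python
from typing import Optional, Sequence


def payback_period(initial_cost: float, annual_inflows: Sequence[float]) -> Optional[float]:
    """Years needed for cumulative net inflows to recover initial_cost.

    Assumes inflows arrive evenly within each year, so the final, partial
    year is found by linear interpolation. Returns None if the cost is not
    recovered within the given horizon.
    """
    remaining = initial_cost
    if remaining <= 0:
        return 0.0  # nothing to recover
    for year, inflow in enumerate(annual_inflows, start=1):
        if inflow >= remaining:
            # Fraction of this year needed to cover the outstanding amount.
            return (year - 1) + remaining / inflow
        # Negative inflows (extra costs) increase the amount still outstanding.
        remaining -= inflow
    return None


# Hypothetical IS investment: 100,000 upfront, then uneven yearly net savings.
print(payback_period(100_000, [30_000, 40_000, 50_000]))  # 2.6
```

The single figure ignores cash flows after the payback point, the time value of money and any intangible benefits, which is why such simple procedures sit uneasily with investments whose benefits are intangible and slow to materialise.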

Interpretative approaches often view IT systems as social systems that have information technology embedded in them (Goldkuhl & Lyytinen, 1982). Peffers and Saarinen (2002) argued that evaluating IT in financial terms may be biased toward the most easily measured benefits and prone to manipulation to justify predetermined investment decisions, resulting in systematic over- or under-investment in IT. Several contingency models for selecting evaluation methods for information system investments have been described in the theoretical literature. At the organisational level, contingency factors may include the industry situation (stable or changing) and the leadership role of the organisation (Farbey et al., 1992). At the information system project level, contingency factors may include the project type, the project size, the type of expected benefits (qualitative vs. quantifiable), the stage of the system's life cycle, and the development and procurement strategy (Farbey et al., 1992).

Evaluation of Information Systems is a significant matter for study as well as practice (Irani et al., 2015). As Information Systems have become more pervasive, complex and interactive, the emphasis of evaluation has, to a degree, shifted to how, and to what extent, ISs serve organisational change (Klecun and Cornford, 2015). Evaluating the success of Information Systems has been recognised as one of the most critical issues in the IS field, and several conceptual and empirical studies have been conducted to explore it. An extensive debate continues over the appropriate set of variables for determining users' perceptions of the success of Information Systems.

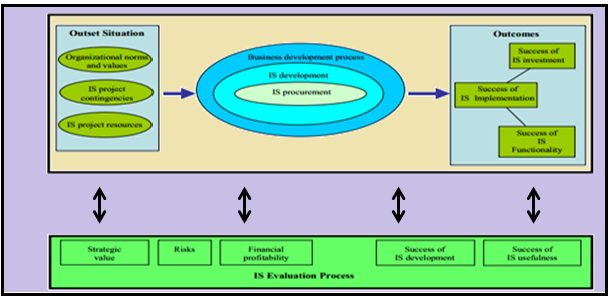

Peffers and Saarinen (2002) divided evaluation criteria into five categories: Strategic Value; Profitability; Risk; Successful Development and Procurement; and Successful Use and Operations. The IS evaluation process should also adapt to possible changes in the assumptions on which the Information Systems investment is based; thus, in our conceptual framework there is a two-way relationship between the IS evaluation process and the business development and IS development processes.

There are three types of strategy for information system evaluation:

- Goal-based evaluation: Goal-based evaluation means that explicit goals from the organisational context drive the evaluation, and these goals are used to measure the IT system. According to Patton (1990), goal-based evaluation measures the extent to which a program or intervention has attained clear and specific objectives. The focus is on the intended services and outcomes of a program: its goals. Good et al. (1986) asserted that evaluations should be measurable and that the evaluation should meet the requirements specification. Many critics have objected to this method because such evaluation concentrates on technical and economic aspects rather than human and social aspects (Hirschheim & Smithson, 1988). These theorists further argued that this can have major negative consequences, not only for users, in the form of decreased satisfaction, but also for the organization, in terms of system value. The basic strategy of this approach is to measure whether predefined goals are fulfilled, to what extent and in what ways. The approach is deductive. What is measured depends on the character of the goals, and both quantitative and qualitative approaches can be used.

- Goal-free evaluation: Goal-free evaluation means that no such explicit goals are used. It is an inductive and situationally driven strategy. The major objective of this interpretive evaluation is to gain a thorough understanding of the nature of what is to be evaluated and to create motivation and commitment (Hirschheim and Smithson, 1988). The involvement of a wide array of stakeholder groups is often considered essential to this approach, which can also be a practical hindrance where time or resources for the evaluation are short. Patton (1990) also uses the term goal-free evaluation, describing it as collecting data on a broad array of actual effects and evaluating the importance of these effects in meeting demonstrated needs. The evaluator makes a deliberate attempt to avoid all rhetoric related to program goals: no discussion about goals is held with staff, no program brochures or proposals are read, and only the program's outcomes and measurable effects are studied. One aim of goal-free evaluation is to avoid the risk of narrowly studying stated program objectives and thereby missing important unanticipated outcomes. Another is to eliminate the negative connotations attached to the discovery of unanticipated effects: "The whole language of side-effected or secondary effect or even unanticipated effect tended to be a put-down of what might well be a crucial achievement, especially in terms of new priorities." A further aim is to eliminate the perceptual biases introduced into an evaluation by knowledge of goals, and to maintain evaluator objectivity and independence through goal-free conditions (Patton, 1990).

- Criteria-based evaluation: Criteria-based evaluation means that explicit general criteria are used as an evaluation yardstick. There are numerous criteria-based approaches, such as checklists, heuristics, principles or quality ideals. In this approach, the IT system's interface and/or the interaction between users and the IT system serves as the basis for the evaluation, together with a set of predefined criteria.
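Goal-based and criteria-based evaluation differ mainly in their yardstick: organisation-specific goals versus general criteria. A minimal Python sketch of the two, assuming quantitative, higher-is-better goals and a yes/no checklist; the class, function names, goals, targets and checklist items are all hypothetical. Goal-free evaluation is inductive and interpretive, so it is deliberately not reduced to a score here.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Goal:
    name: str
    target: float    # explicit goal set by the organisation (assumed > 0, higher is better)
    achieved: float  # value measured once the system is in use


def goal_attainment(goals: List[Goal]) -> Dict[str, float]:
    """Goal-based: extent (0..1) to which each predefined goal was met."""
    return {g.name: min(g.achieved / g.target, 1.0) for g in goals}


def criteria_score(checklist: Dict[str, bool]) -> float:
    """Criteria-based: share of general criteria (e.g. heuristics) satisfied."""
    return sum(checklist.values()) / len(checklist)


# Hypothetical goals and checklist, for illustration only.
goals = [
    Goal("orders processed per day", target=500, achieved=450),
    Goal("share of invoices sent electronically", target=0.8, achieved=0.9),
]
print(goal_attainment(goals))
# {'orders processed per day': 0.9, 'share of invoices sent electronically': 1.0}

checklist = {
    "consistent terminology": True,
    "informative error messages": False,
    "actions can be undone": True,
    "help is accessible": True,
}
print(criteria_score(checklist))  # 0.75
```

Capping attainment at 1.0 is a design choice: it reports whether a goal was met rather than rewarding overshoot, which a real evaluation might treat differently.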

Information Systems Evaluation Process

The evaluation process should identify and control the critical areas of an Information Systems project. Before selecting the evaluation criteria and methods, and deciding who will be involved in the evaluation, it is essential to identify all the relevant interest groups for the Information Systems project (Serafeimidis and Smithson, 2000). A comprehensive set of evaluation criteria should be used to ensure that all dimensions of the Information Systems endeavour are taken into account and assessed. The Information Systems evaluation process must be incorporated into the business development process, the Information Systems development process, and the IS procurement process.

Wen and Sylla (1999) recommended a three-step process for Information Systems evaluation:

- Intangible benefits evaluation

- IS investment risk analysis

- Tangible benefits evaluation

The steps should be taken in this order; that is, intangible benefits and risks should be evaluated before tangible benefits. Similarly, the category of successful IS development is placed before that of IS usefulness, since usefulness can only be observed after the IS has been in use for a while. Ideally, an Information Systems evaluation would include all categories, but its focus differs depending on who conducts the evaluation and where the initiative for it comes from. According to Farbey et al. (1992), the focus of evaluation changes with organisational interests, which may lie at a number of levels, such as costs and benefits, the organisation's competitive position or industrial relations.
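The ordering constraint in Wen and Sylla's process can be made explicit in a small sketch. It implements only the sequence of the three steps, not their method. It assumes, hypothetically, that each step is carried out by an "assessor" function and that later steps may consult earlier results, for instance so that tangible benefits are estimated with the already identified risks in view. `Step`, `run_evaluation` and the placeholder assessors are illustrative names.

```python
from enum import IntEnum
from typing import Any, Callable, Dict


class Step(IntEnum):
    # Order recommended by Wen and Sylla (1999).
    INTANGIBLE_BENEFITS = 1
    INVESTMENT_RISK = 2
    TANGIBLE_BENEFITS = 3


# Hypothetical: each assessor receives the results of the steps already run.
Assessor = Callable[[Dict[Step, Any]], Any]


def run_evaluation(assessors: Dict[Step, Assessor]) -> Dict[Step, Any]:
    """Run every step in the prescribed order, whatever order they were supplied in."""
    missing = set(Step) - set(assessors)
    if missing:
        raise ValueError(f"missing steps: {sorted(s.name for s in missing)}")
    results: Dict[Step, Any] = {}
    for step in sorted(assessors):  # IntEnum members sort by value: 1, 2, 3
        results[step] = assessors[step](dict(results))
    return results


# Supplied in reverse order; each placeholder assessor just reports how many
# earlier results it could see.
plan = {
    Step.TANGIBLE_BENEFITS: lambda earlier: len(earlier),
    Step.INVESTMENT_RISK: lambda earlier: len(earlier),
    Step.INTANGIBLE_BENEFITS: lambda earlier: len(earlier),
}
for step, seen in run_evaluation(plan).items():
    print(step.name, seen)
# INTANGIBLE_BENEFITS 0
# INVESTMENT_RISK 1
# TANGIBLE_BENEFITS 2
```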

It is argued that whether the organisation's interests are appropriately taken into consideration depends on the knowledge and skills of the evaluator. Therefore, senior management should carefully consider who should be involved in the evaluation. The results of the evaluation should be delivered to everyone associated with the project so that the information obtained can be used in the decision-making phase. Most likely, the decision itself would be to continue with the investment, to change the specifications, scope or implementation method of the system, or to 'freeze' the project. In addition, the changes might include schedule changes, reorganisation of the project (for example, a change of project management), or a change of vendor.

Major reasons for these changes may be apparent mistakes, unanticipated problems, new experience with the project that changes the view of the right course of action, or changes in the company's environment that are beyond its control. Evaluating the success of an Information Systems implementation should consider at least two dimensions: process success and product success (Saarinen, 1993). Evaluating the conduct of the Information Systems development process enables learning for future projects. Product success includes both the functionality of the Information Systems and the realisation of the expected benefits from the Information Systems investment.

Holistic framework of IS evaluation

To summarize, evaluating information systems is a complicated task. Evaluation is seen as supporting organizations in making managerial decisions about their systems, but it does not seem able to keep pace with the multifaceted developments in technology.